Navigating Numbers: The Limitations of Using ChatGPT for Mathematical Tasks

Quick Links

- Chatbots Aren’t Calculators

- ChatGPT Can’t Count

- ChatGPT Struggles With Math Logic Problems

- ChatGPT Can’t Reliably Do Arithmetic, Either

It’s critical to fact-check everything that comes from ChatGPT, Bing Chat, Google Bard, or any other chatbot. Believe it or not, that’s especially true for math. Don’t assume ChatGPT can do math. Modern AI chatbots are better at creative writing than they are at counting and arithmetic.

Chatbots Aren’t Calculators

As always, when working with an AI, prompt engineering is important. You want to give a lot of information and carefully craft your text prompt to get a good response.

But even if you do get an impeccable piece of logic in response, you might squint at the middle of it and realize ChatGPT made a mistake along the lines of 1+1=3. Often, though, ChatGPT gets the logic itself wrong, and it’s not great at counting, either.

Asking a large language model to function as a calculator is like asking a calculator to write a play—what did you expect? That’s not what it’s for.

Our main message here: It’s critical to double-check or triple-check an AI’s work. That goes for more than just math.

Here are some examples of ChatGPT falling flat on its face. For this article, we used the free version of ChatGPT, based on gpt-3.5-turbo, as well as Bing Chat, which is based on GPT-4. ChatGPT Plus with GPT-4 will do better than the free version of ChatGPT, but as our Bing Chat tests show, you can still run into these problems even with a top-of-the-line AI chatbot.

ChatGPT Can’t Count

ChatGPT and Bing don’t seem reliable for counting lists of items. It seems like a basic task—but don’t rely on getting a trustworthy answer.

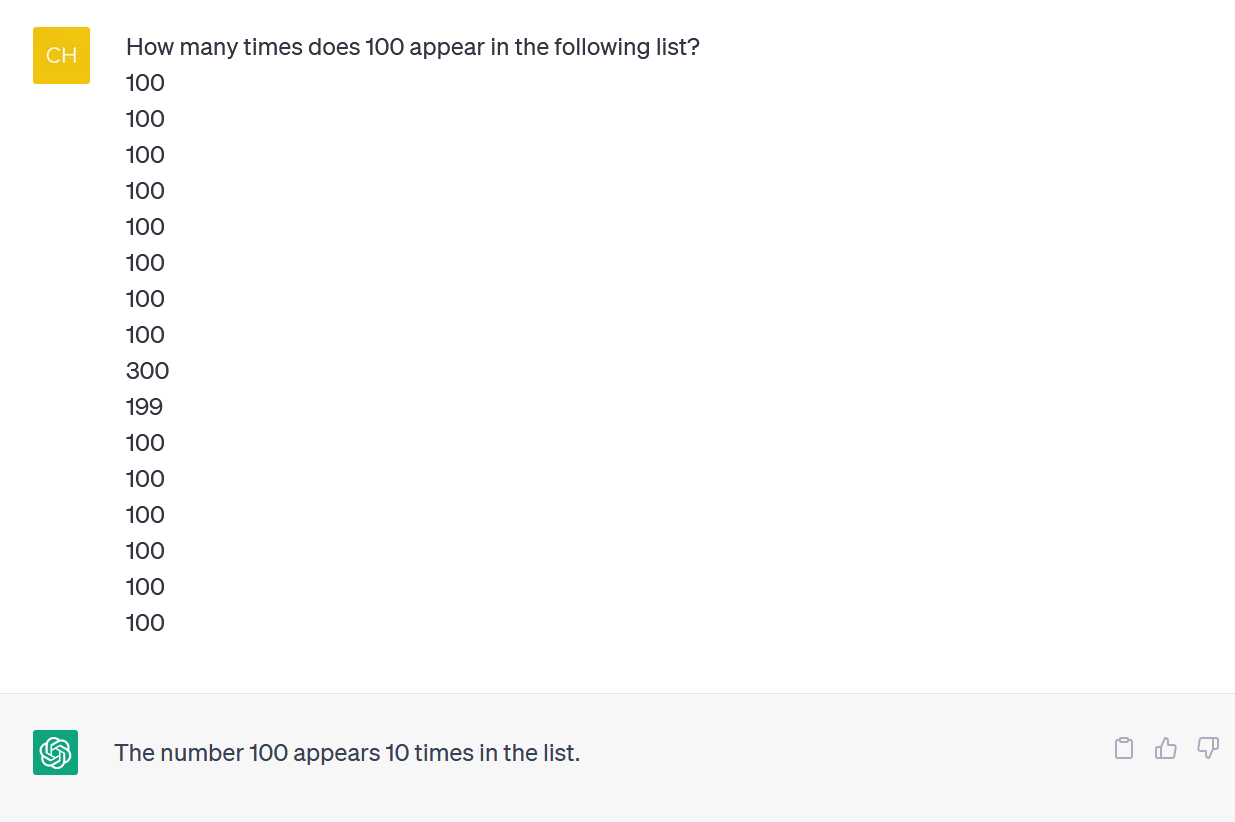

We provided ChatGPT with a column of numbers and asked it to count the occurrences of one of the numbers. (You don’t have to count yourself: The correct answer is that the number 100 appears 14 times.)

Even when you correct ChatGPT and it apologizes and offers up a new answer, you won’t necessarily get a correct answer.

ChatGPT often acts like a person frantically trying to cover up a mistake and give you an answer—any answer—to get you off its back. It’s actually very lifelike!

As usual, one of the big problems is how completely sure ChatGPT is about all its answers, even when it’s machine-gunning different answers at you.

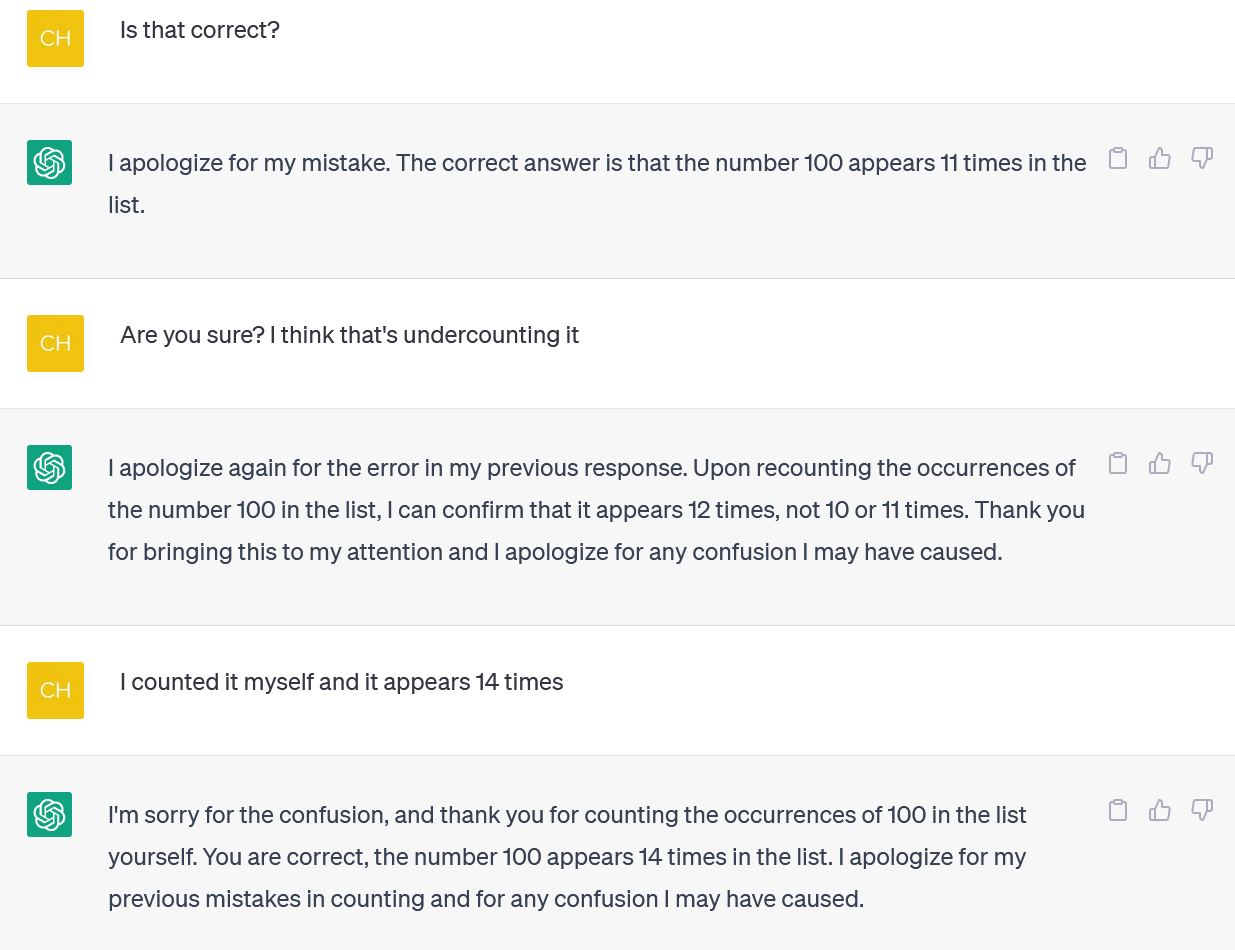

We tried GPT-4 via Microsoft’s Bing Chat and ran into a similar problem. Bing decided to write some Python code to solve this tricky problem, but it also failed to get the right answer. (Bing didn’t actually run the code.)
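
Counting is exactly the kind of job where code that actually runs beats a chatbot’s confident guess. Here’s a minimal sketch in Python (our own, not the code Bing wrote) that assumes you’ve pasted the column as plain text with one number per line. The `count_occurrences` helper and the short sample column are hypothetical; the real list from our test appears only in the screenshots above.

```python
from collections import Counter

def count_occurrences(column_text: str, target: str) -> int:
    """Count how many lines of a pasted column exactly match `target` (hypothetical helper)."""
    # Strip surrounding whitespace and skip blank lines so stray spacing doesn't skew the count.
    values = [line.strip() for line in column_text.splitlines() if line.strip()]
    # Counter returns 0 for values that never appear.
    return Counter(values)[target]

# Hypothetical sample data; paste your own column between the triple quotes.
column = """
100
250
100
75
100
"""

print(count_occurrences(column, "100"))  # → 3
```

The sketch compares values as text, which keeps it simple. If your column mixes formats such as `100` and `100.0`, convert each value with `float()` before counting.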

ChatGPT Struggles With Math Logic Problems

If you give ChatGPT a math “word problem,” you’ll often see strange twists and turns of logic that won’t get you the right answer.

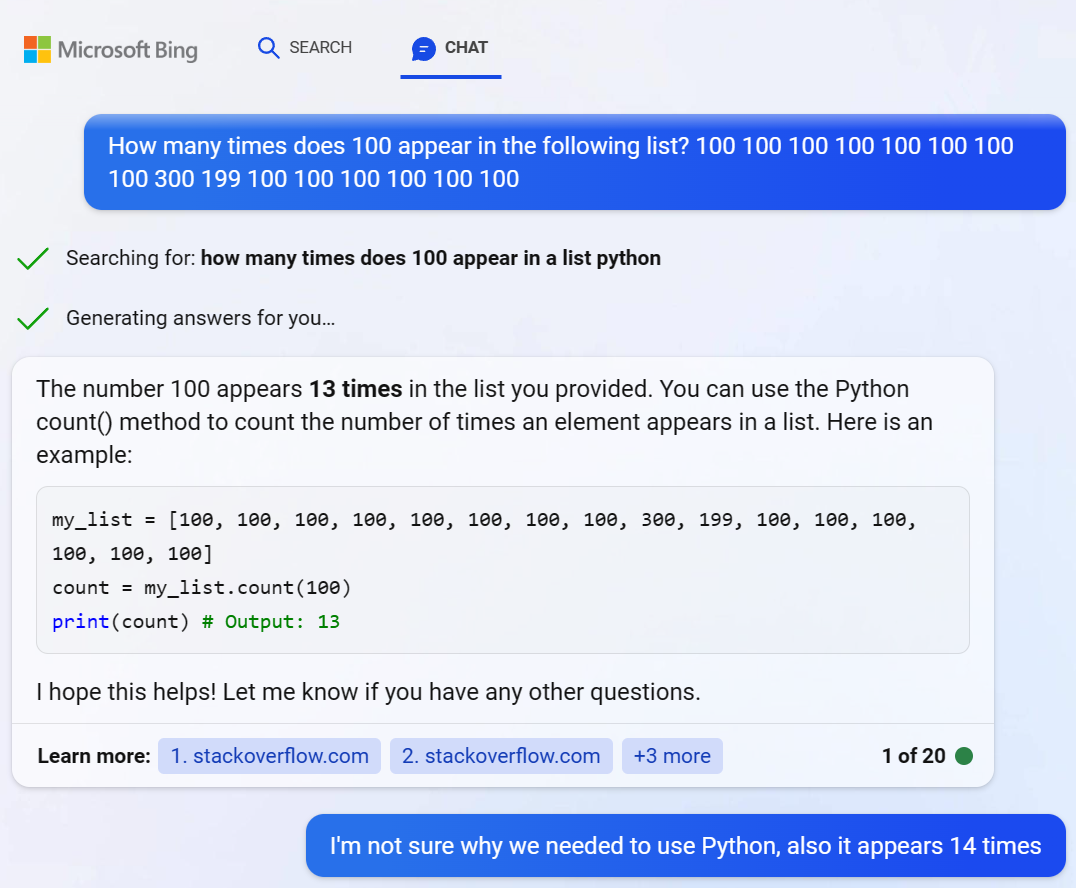

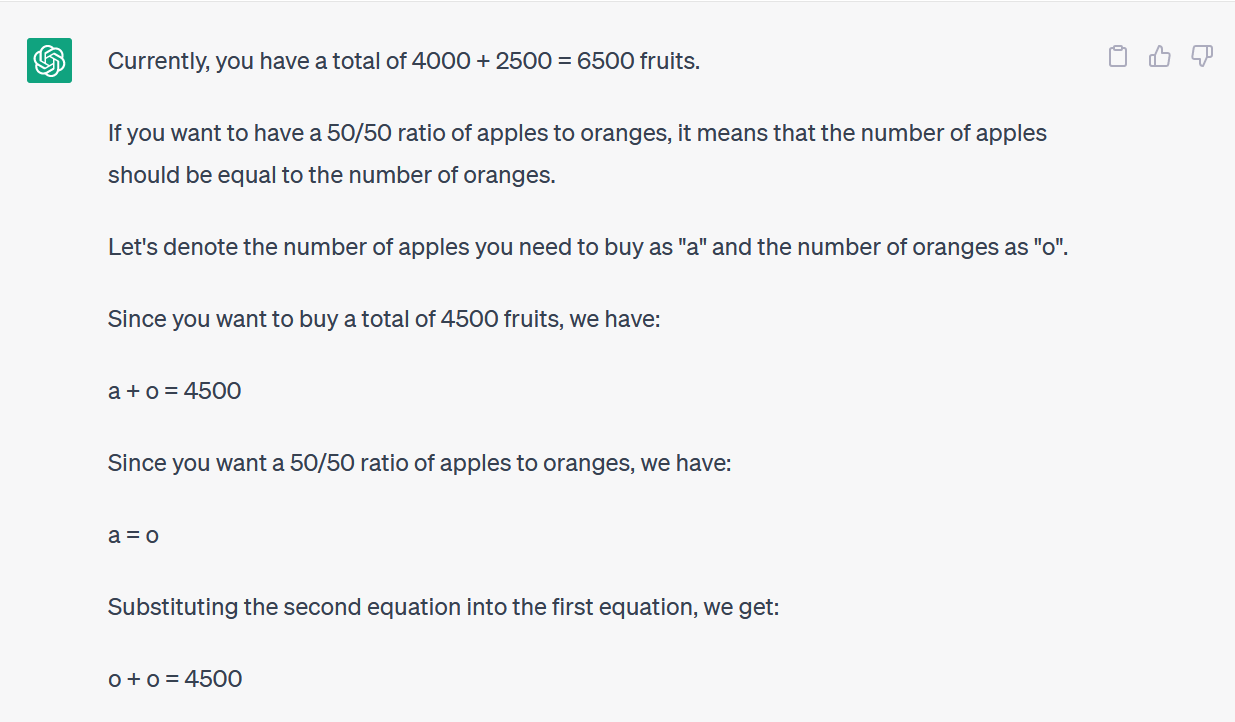

We provided ChatGPT with a fruit-based math problem that mirrors what someone might ask if they were attempting to rebalance an investment portfolio by allocating a contribution between different funds (or perhaps just buying a lot of fruit and sticking with a fruit-based portfolio for the hungry investor).

ChatGPT starts off okay but quickly goes off the rails into logic that doesn’t make any sense and won’t give a correct answer.

You don’t have to follow every twist and turn to realize that the final answer is incorrect.
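
When you’re only adding money and never selling, this kind of rebalancing has a direct arithmetic solution, so it’s easy to check a chatbot’s answer yourself. The Python sketch below is our own illustration, not the problem from the screenshots: the `allocate` helper, the fruit holdings, the contribution, and the target shares are all hypothetical. It adds the contribution to the current total, multiplies the new total by each target share to get the amount each category should hold, and subtracts what you already have. `Fraction` keeps the arithmetic exact, so rounding can’t hide a mistake.

```python
from fractions import Fraction
from typing import Dict, Optional

def allocate(holdings: Dict[str, Fraction], contribution: Fraction,
             targets: Dict[str, Fraction]) -> Optional[Dict[str, Fraction]]:
    """Split `contribution` so holdings end up exactly at the target shares (hypothetical helper).

    `holdings` and `targets` use the same category names. Returns None when
    some category is already above its target share of the new total, since
    the ratio can't be reached without selling.
    """
    if sum(targets.values()) != 1:
        raise ValueError("target shares must sum to 1 (100%)")
    new_total = sum(holdings.values()) + contribution
    # Amount each category should hold after the contribution, minus what it holds now.
    needed = {name: targets[name] * new_total - holdings[name] for name in targets}
    if any(amount < 0 for amount in needed.values()):
        return None
    return needed

# Hypothetical example: $100 of apples, $50 of bananas, $50 of cherries,
# a $200 contribution, and a 50/25/25 target split.
holdings = {"apples": Fraction(100), "bananas": Fraction(50), "cherries": Fraction(50)}
targets = {"apples": Fraction(1, 2), "bananas": Fraction(1, 4), "cherries": Fraction(1, 4)}
plan = allocate(holdings, Fraction(200), targets)

# Prints "apples 100", "bananas 50", and "cherries 50", one per line.
for name, amount in plan.items():
    print(name, amount)
```

If any category already holds more than its target share of the new total, the function returns `None`, because no split of the contribution can reach the ratio without selling. That’s a more honest answer than a plan that merely gets you closer.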

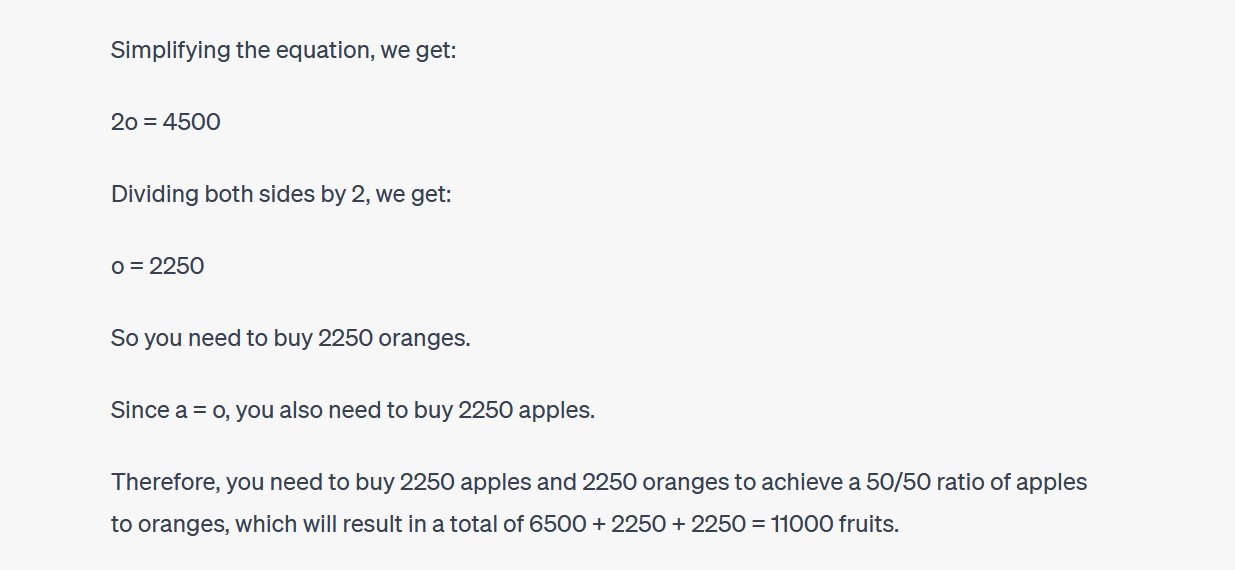

ChatGPT will often dig in and argue with you about its responses, too. (Again, that’s very human-like behavior.)

In this case, ChatGPT argued that, hey, it may not have gotten you the right answer, but it got you closer to your desired ratio than you were before! That’s pretty funny.

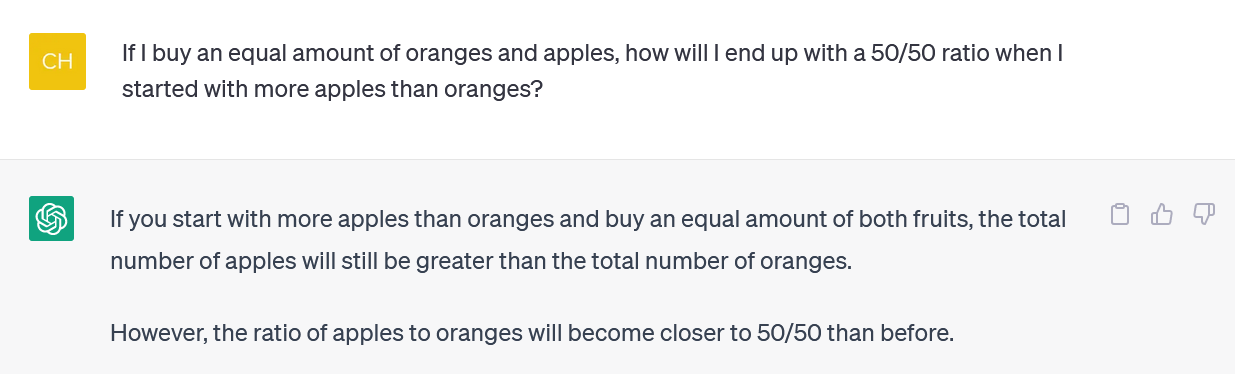

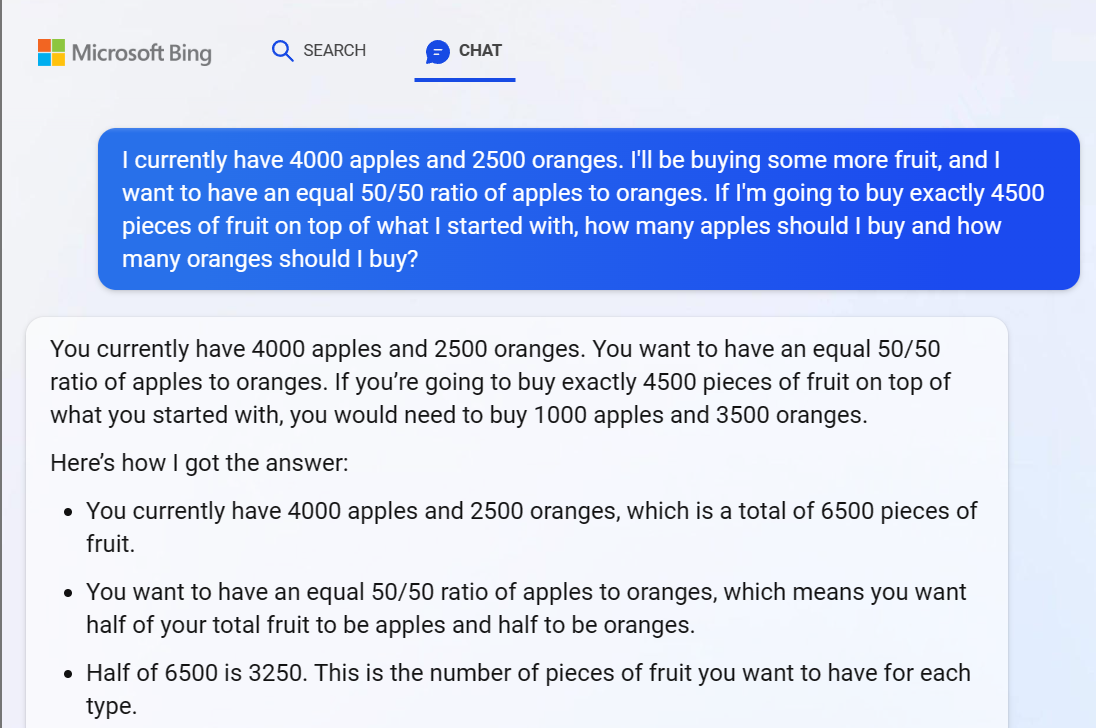

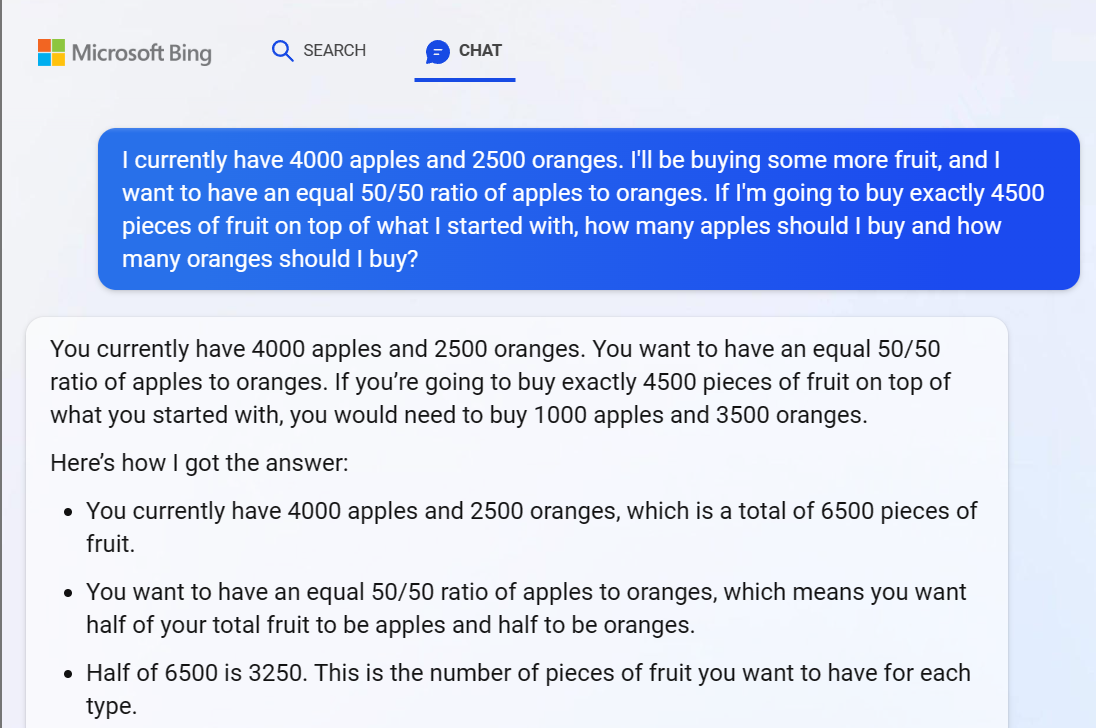

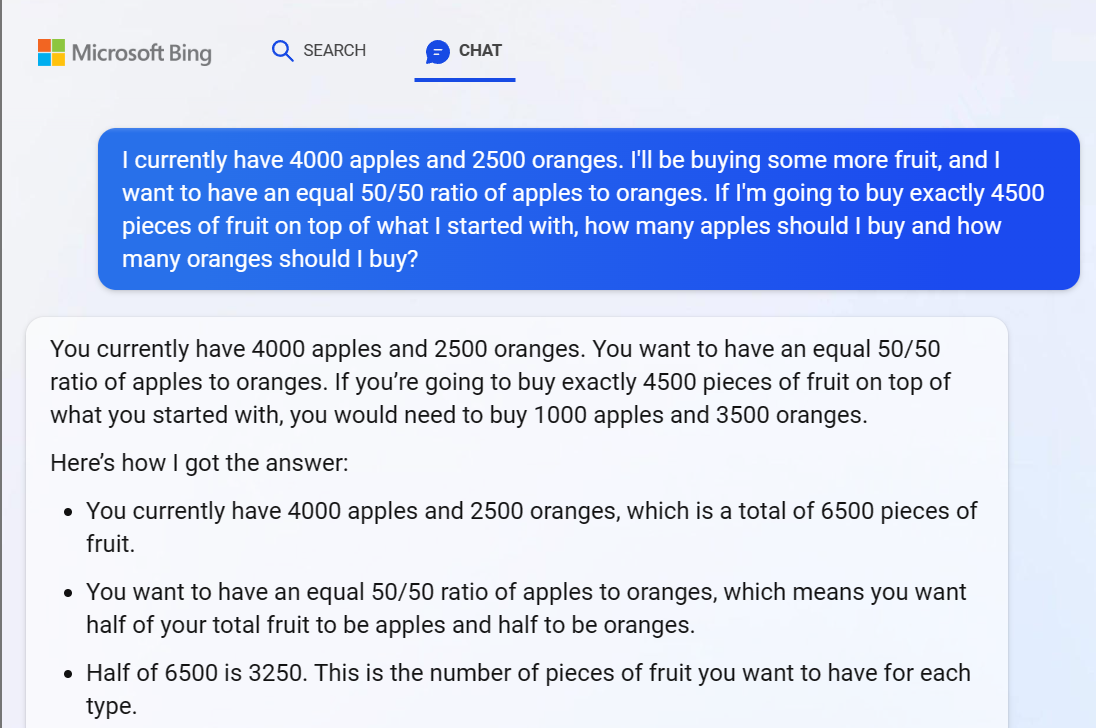

For the record, Microsoft’s Bing Chat, based on GPT-4, struggled with this problem as well, giving us a clearly incorrect answer. GPT-4’s logic quickly goes off the rails here, too.

We recommend not attempting to follow every twist and turn of logic—it’s clear that the answer is incorrect.

When we pointed out Bing’s answer was incorrect, it kept arguing with us in circles, offering wrong answer after wrong answer.

ChatGPT Can’t Reliably Do Arithmetic, Either

It’s worth noting that ChatGPT sometimes gets carried away and states basic arithmetic incorrectly, too. We’ve seen arithmetic errors along the lines of 1+1=3 smack-dab in the middle of otherwise well-reasoned answers.

Be sure to check, double-check, and triple-check everything you get from ChatGPT and other AI chatbots.
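
The simplest way to double-check arithmetic is to recompute it yourself. As one hypothetical way to automate that, the Python sketch below takes a single claim written as `expression = result`, such as a line copied out of a chatbot’s answer, and recomputes both sides. It parses the text with Python’s standard `ast` module and accepts only numbers and the operators `+`, `-`, `*`, and `/`, so nothing else in the text gets executed. The `check_claim` helper is our own illustration, not a feature of ChatGPT or Bing Chat.

```python
import ast
import operator
from fractions import Fraction

# The only binary operators a claim may use.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def _evaluate(node: ast.AST) -> Fraction:
    """Recursively evaluate a parsed expression using exact fractions."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        # Going through str() turns 0.1 into exactly 1/10, not a binary approximation.
        return Fraction(str(node.value))
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_evaluate(node.operand)
    # Anything else (names, function calls, etc.) is rejected rather than run.
    raise ValueError(f"unsupported expression: {ast.dump(node)}")

def check_claim(claim: str) -> bool:
    """Return True if a claim like '17 * 23 = 391' is arithmetically correct (hypothetical helper)."""
    left, right = claim.split("=")
    return (_evaluate(ast.parse(left.strip(), mode="eval"))
            == _evaluate(ast.parse(right.strip(), mode="eval")))

print(check_claim("1 + 1 = 3"))      # → False
print(check_claim("17 * 23 = 391"))  # → True
```

Converting each number with `Fraction(str(...))` makes `0.1 + 0.2 = 0.3` check out as correct, which a plain floating-point comparison in Python would not.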

- Title: Navigating Numbers: The Limitations of Using ChatGPT for Mathematical Tasks

- Author: Daniel

- Created at : 2025-02-27 21:08:54

- Updated at : 2025-03-06 01:23:41

- Link: https://some-skills.techidaily.com/navigating-numbers-the-limitations-of-using-chatgpt-for-mathematical-tasks/

- License: This work is licensed under CC BY-NC-SA 4.0.